What is data splitting and why is it important?

What is data splitting?

Data splitting is the division of a data set into two or more subsets. Typically, with a two-part split, one part is used to train the model and the other to evaluate or test it.

Data splitting is an important aspect of data science, particularly for creating models based on data. This technique helps ensure that data models, and the processes that use them, such as machine learning, are accurate.

How data splitting works

In a basic two-part data split, the training data set is used to train and develop models. Training sets are commonly used to estimate model parameters or to compare the performance of different models.

The testing data set is used after the training is done. The model's results on the test data are compared with its results on the training data to check that the final model works correctly. With machine learning, data is commonly split into three or more sets. With three sets, the additional set is the dev set, which is used to tune the parameters of the learning process.

There is no set guideline or metric for how the data should be split; it may depend on the size of the original data pool or the number of predictors in a predictive model. Organizations and data modelers may choose to split data based on data sampling methods, such as the following three methods:

- Random sampling. This data sampling method protects the data modeling process from bias toward different possible data characteristics. However, a random split can distribute the data unevenly; for example, a rare class may end up underrepresented in the training or test set.

- Stratified random sampling. This method selects data samples at random within defined subgroups, or strata, such as class labels. It helps keep each subgroup represented in the same proportion in the training and test sets.

- Nonrandom sampling. This approach is typically used when data modelers want the most recent data as the test set.
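The three sampling methods above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a reference implementation: the function names (`random_split`, `stratified_split`, `most_recent_split`), the 20% test fraction and the toy `rows` data are all assumptions made for the example.

```python
import random
from collections import defaultdict

def random_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows, then cut off the last test_fraction as the test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]

def stratified_split(rows, label_of, test_fraction=0.2, seed=0):
    """Run a random split separately inside each label group (stratum)."""
    groups = defaultdict(list)
    for row in rows:
        groups[label_of(row)].append(row)
    train, test = [], []
    for label in sorted(groups):
        group_train, group_test = random_split(groups[label], test_fraction, seed)
        train.extend(group_train)
        test.extend(group_test)
    return train, test

def most_recent_split(rows, time_of, test_fraction=0.2):
    """Nonrandom split: the newest rows become the test set."""
    ordered = sorted(rows, key=time_of)
    n_test = round(len(ordered) * test_fraction)
    cut = len(ordered) - n_test
    return ordered[:cut], ordered[cut:]

# Hypothetical imbalanced data: 90 "neg" rows and 10 "pos" rows, one row per day.
rows = [{"day": d, "label": "pos" if d % 10 == 0 else "neg"} for d in range(100)]

strat_train, strat_test = stratified_split(rows, lambda r: r["label"])
recent_train, recent_test = most_recent_split(rows, lambda r: r["day"])
```

In this sketch the stratified split always places the same share of "pos" rows in the test set, while the plain random split may place more or fewer, depending on the shuffle.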

With data splitting, organizations don’t have to choose between using the data for analytics versus statistical analysis, since the same data can be used in the different processes.

Common data splitting uses

Ways that data splitting is used include the following:

Data splitting in machine learning

In machine learning, data splitting is typically done to detect and avoid overfitting. Overfitting occurs when a machine learning model fits its training data too well and fails to reliably fit additional data.

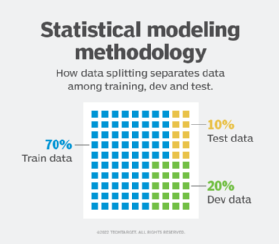
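To make overfitting concrete, the sketch below builds a deliberately extreme "model" that only memorizes its training examples. The data, the memorizing model and the 80-20 split are hypothetical choices made for illustration; real models overfit in subtler ways.

```python
import random
from collections import Counter

# Hypothetical data: the label follows a simple rule (x >= 50).
data = [(x, int(x >= 50)) for x in range(100)]
random.Random(0).shuffle(data)
train, test = data[:80], data[80:]

# A deliberately overfit "model": it memorizes every training example
# and falls back to the most common training label for unseen inputs.
memory = dict(train)
fallback = Counter(label for _, label in train).most_common(1)[0][0]

def predict(x):
    return memory.get(x, fallback)

def accuracy(rows):
    return sum(predict(x) == y for x, y in rows) / len(rows)

train_acc = accuracy(train)
test_acc = accuracy(test)
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

The memorizer scores perfectly on the data it has seen, so only the held-out test set reveals that it has not learned the underlying rule.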

The original data for a machine learning model is typically split into three or four sets. The three sets commonly used are the training set, the dev set and the testing set:

- The training set is the portion of data used to train the model. The model should observe and learn from the training set, optimizing any of its parameters.

- The dev set is a data set of examples used to tune learning process parameters. It is also called the cross-validation or model validation set. Its goal is to rank the accuracy of candidate models, which helps with model selection.

- The testing set is the portion of data that the final model is tested on, with the results compared against those from the previous sets of data. The testing set acts as an evaluation of the final model and algorithm.
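The division of labor among the three sets can be sketched as follows. This is an illustrative example under stated assumptions, not a prescribed workflow: the noisy toy data, the simple nearest-neighbor classifier and the candidate values of `k` are all hypothetical.

```python
import random
from collections import Counter

rng = random.Random(0)

def make_row():
    """Hypothetical noisy example: label is 1 when x > 0.5, flipped 20% of the time."""
    x = rng.random()
    y = int(x > 0.5)
    if rng.random() < 0.2:
        y = 1 - y
    return x, y

# The rows are generated independently, so slicing them is already a random split.
rows = [make_row() for _ in range(300)]
train, dev, test = rows[:210], rows[210:270], rows[270:]

def knn_predict(x, k):
    """Majority label among the k training points closest to x."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(examples, k):
    return sum(knn_predict(x, k) == y for x, y in examples) / len(examples)

# Tune the learning process parameter k on the dev set only.
candidates = [1, 5, 15]
dev_scores = {k: accuracy(dev, k) for k in candidates}
best_k = max(dev_scores, key=dev_scores.get)

# Use the test set once, after k is fixed, as the final evaluation.
test_acc = accuracy(test, best_k)
print(f"dev scores: {dev_scores}, chosen k: {best_k}, test accuracy: {test_acc:.2f}")
```

Keeping the test set out of the tuning loop is the point of the design: if `k` were chosen on the test set, the final accuracy figure would no longer be an independent evaluation.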

Data should be split so that most of it is used for training. For example, data might be split at an 80-20 or a 70-30 ratio of training vs. testing data. The exact ratio depends on the data, but a 70-20-10 ratio for training, dev and test splits is a common choice for small data sets.
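A ratio-based split takes only a few lines of code. The helper below, `three_way_split`, is a hypothetical name used for this sketch; it assumes the rows can be shuffled freely, which would not hold for time-ordered data.

```python
import random

def three_way_split(rows, ratios=(0.7, 0.2, 0.1), seed=0):
    """Shuffle rows and cut them into training, dev and test lists."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_dev = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]  # remainder, so no row is lost to rounding
    return train, dev, test

train, dev, test = three_way_split(range(1000))
print(len(train), len(dev), len(test))
```

Passing `ratios=(0.8, 0.0, 0.2)` to the same helper gives a plain 80-20 training and testing split with an empty dev set.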
