How to detect deepfakes manually and using AI

AI is both a blessing and a curse.

It is a blessing because AI-generated content can help people learn and work more efficiently. But AI is a curse when it comes to deceptions such as deepfakes.

Deepfake technology uses AI to create or modify images, videos or audio recordings so that they look or sound convincing yet are completely counterfeit. While some AI-generated content is useful and entirely benign, deepfakes are often created to mislead and misinform, and they can enable serious cybersecurity threats such as identity theft and phishing scams.

The legal status of AI-generated content is still being sorted out, but thorny privacy, defamation, intellectual property infringement and general misrepresentation issues have already emerged. That's why it's so important to be aware of deepfakes and learn how to spot them.

Let’s examine how to detect deepfakes and discuss some manual and automated methods designed to help stop the spread of bogus content.

Hints to spot a deepfake

No single deepfake identification technique is foolproof, but combining several of the following manual detection methods can help you gauge how likely it is that an image, video or audio file is a deepfake.

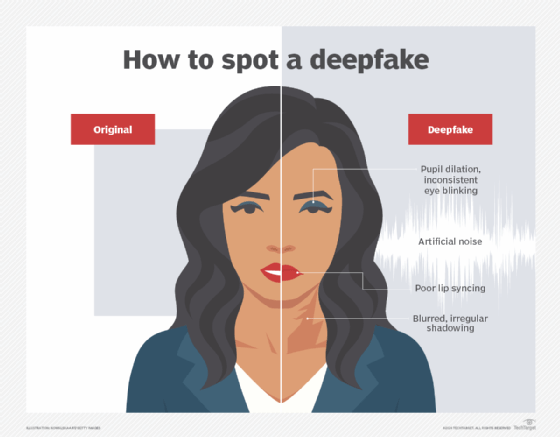

Facial and body movement

In images and video, deepfakes can often still be identified by closely examining the subjects' facial expressions and body movements. AI frequently fails to render a person's likeness consistently. These subtle flaws tend to make viewers uneasy, a reaction known as the uncanny valley.

Lip-sync detection

When a video is paired with altered or synthesized speech, the speaker's lip movements often fall out of sync with the words being spoken. Pay close attention to the lips, which can reveal these mismatches.
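
Software can automate this check. Below is a minimal Python sketch that assumes two inputs you have already extracted: a per-frame "mouth opening" measurement, for example from a facial-landmark detector (not shown), and a per-frame audio loudness value. The sketch slides one signal against the other to find the offset at which they correlate best. The function names, the 5-frame search window and the thresholds are hypothetical and are not taken from any particular detection product.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences; 0.0 if either is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def best_lag(mouth_open, audio_energy, max_lag=5):
    """Find the frame offset that best aligns mouth movement with audio loudness.

    Returns (lag, correlation). A positive lag means the audio trails the video.
    """
    best_shift, best_r = 0, -2.0  # -2 is below any real correlation value
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth_open[: len(mouth_open) - lag], audio_energy[lag:]
        else:
            a, b = mouth_open[-lag:], audio_energy[: len(audio_energy) + lag]
        n = min(len(a), len(b))
        if n < 3:  # too little overlap to correlate meaningfully
            continue
        r = pearson(a[:n], b[:n])
        if r > best_r:
            best_shift, best_r = lag, r
    return best_shift, best_r


def lip_sync_suspicious(mouth_open, audio_energy, min_corr=0.5, max_offset=2):
    """Hypothetical rule: flag a weak correlation or a large audio/video offset."""
    lag, r = best_lag(mouth_open, audio_energy)
    return r < min_corr or abs(lag) > max_offset


mouth = [0, 1, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0]
delayed = [0, 0, 0] + mouth[:-3]  # the same audio, arriving 3 frames late
print(best_lag(mouth, delayed))  # lag of 3, correlation close to 1.0
print(lip_sync_suspicious(mouth, delayed))  # flagged: offset exceeds 2 frames
```

Real tools learn this audio-visual relationship from data instead of relying on a single loudness signal. Even so, the idea is the same one the eye catches: mouth shapes that don't line up with the sounds being spoken.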

Inconsistent or absent eye blinking

For now, AI has difficulty simulating natural eye blinking. As a result, deepfake algorithms tend to produce inconsistent blinking patterns or eliminate blinking altogether. Watch the eyes closely, because they are often the quickest giveaway that a video has been altered.
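
Automated checks often measure blinking with the eye aspect ratio (EAR). This is the ratio of the eye's height to its width, and it drops sharply when the eyelids close. The Python sketch below assumes you already have six (x, y) landmarks per eye per frame from a facial-landmark detector (not shown). It counts blinks as runs of low-EAR frames. The 0.2 threshold, the 2-frame minimum and the 5-blinks-per-minute cutoff are illustrative assumptions, not calibrated values.

```python
from math import dist


def eye_aspect_ratio(eye):
    """EAR for six (x, y) landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2 and p3 sit on the upper lid,
    with p6 and p5 on the lower lid directly beneath them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def count_blinks(ears, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended in the middle of a blink
        blinks += 1
    return blinks


def blinks_per_minute(ears, fps, **kwargs):
    minutes = len(ears) / fps / 60.0
    return count_blinks(ears, **kwargs) / minutes


def blinking_looks_unnatural(ears, fps, min_rate=5.0):
    """Hypothetical rule: flag clips whose blink rate is implausibly low."""
    return blinks_per_minute(ears, fps) < min_rate
```

For example, a 30 fps clip whose per-frame EAR never dips below the threshold for a full minute yields a rate of zero and is flagged. This sketch covers only the missing-blink case. Catching irregular patterns would require also looking at the spacing and duration of blinks.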

Irregular reflections or shadowing

Deepfake algorithms often do a poor job of recreating shadows and reflections. Look closely at reflections or shadows on surrounding surfaces, in the backgrounds or even within participants’ eyes.

Pupil dilation

Unnatural pupil dilation is much harder to spot unless the video is in high resolution. In most cases, AI does not change the diameter of the pupils, which makes the eyes look subtly off. This is especially noticeable when the subject's eyes focus on objects that are near or far away, or must adjust to multiple light sources. Pupils that don't dilate and constrict naturally are a sign that the video could be a deepfake.
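
The same reasoning can be expressed as a simple automated test: if the lighting on the eyes changes noticeably but pupil size does not respond, something is wrong. The Python sketch below assumes two hypothetical per-frame inputs. The first is pupil diameter divided by iris diameter, which some earlier measurement step (not shown) would supply. The second is the mean brightness of the eye region. The thresholds are illustrative assumptions.

```python
from math import sqrt
from statistics import mean, pstdev


def pearson(xs, ys):
    """Pearson correlation; 0.0 if either sequence is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def pupil_response_suspicious(pupil_ratio, luminance,
                              min_light_change=0.2, min_variation=0.02):
    """Flag eyes whose pupils ignore changes in lighting.

    pupil_ratio: per-frame pupil diameter divided by iris diameter
                 (dividing by the iris cancels out distance from the camera)
    luminance:   per-frame mean brightness of the eye region, scaled 0.0-1.0
    """
    if max(luminance) - min(luminance) < min_light_change:
        return False  # lighting barely changed, so no pupil response is expected
    if pstdev(pupil_ratio) / mean(pupil_ratio) < min_variation:
        return True  # the light changed but the pupils stayed fixed
    # Real pupils constrict as light increases, so expect a negative correlation.
    return pearson(pupil_ratio, luminance) >= 0
```

This is deliberately crude. It ignores the short delay in a real pupil's response and any size changes caused by refocusing at different distances, so a practical detector would need to account for both.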

Artificial audio noise

Deepfake algorithms often add artificial noise, or artifacts, to audio files to mask edits. Unexplained hiss, static or other background noise that doesn't fit the recording can therefore be a clue.

How to detect fake content with AI

As deepfake creation technologies continue to improve, it will become more difficult to determine whether content has been altered. But AI can also be used to detect AI-generated deepfakes. The good news is that as deepfake creation evolves, AI-powered detection technologies will evolve with it.

Several detection tools available today ingest large sets of deepfake images, video and audio. Using machine learning and deep learning, they analyze this data to learn the unnatural patterns that signal content has been artificially created.
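
Commercial detectors typically train deep neural networks on raw pixels and waveforms. To show the underlying train-then-score idea in a few lines, the Python sketch below substitutes a much simpler model: a logistic regression fitted by gradient descent. It uses two hypothetical, hand-made features per clip, a scaled blink rate and a lip-sync correlation score. The training values are toy numbers invented for illustration, not real measurements.

```python
from math import exp


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + exp(-z))
    ez = exp(z)
    return ez / (1.0 + ez)


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit weights by batch gradient descent on the log-loss. y: 1 = fake, 0 = real."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def fake_probability(w, b, x):
    """Model's estimated probability that a clip with features x is a deepfake."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Toy, made-up feature vectors: [blink rate / 20, lip-sync correlation]
X = [[0.9, 0.8], [0.8, 0.9], [1.0, 0.7],   # labeled real
     [0.1, 0.2], [0.0, 0.4], [0.2, 0.1]]   # labeled deepfake
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)
print(fake_probability(w, b, [0.05, 0.15]))  # high: resembles the deepfakes
print(fake_probability(w, b, [0.95, 0.85]))  # low: resembles the real clips
```

A classifier like this is only as good as its training examples. That is why detection tools keep retraining on newer deepfakes as creation methods change.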

The following are two additional ways that AI can be used to automatically spot deepfakes:

- Source analysis. A multimedia file's origin can reveal whether it has been altered, but tracing a file's source manually is a daunting task. Deepfake detection algorithms can analyze file metadata far more thoroughly and quickly to help verify that a video is unaltered and authentic.

- Background video consistency checks. It used to be easy to identify a deepfake by its background. Today, however, AI tools are increasingly capable of altering backgrounds so they look completely authentic. Deepfake detectors can pinpoint altered backgrounds by running highly granular checks at multiple points in the frame to find changes the human eye might miss.
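
The background consistency check can be sketched in its simplest form. The Python example below assumes a static camera and grayscale frames stored as nested lists of pixel values. It splits each frame into a grid of small cells and flags any background cell whose average brightness jumps between consecutive frames. The optional `subject_box` (the person's bounding box, from a detector that is not shown) excludes the region where movement is expected. The cell size and threshold are hypothetical defaults.

```python
def cell_means(frame, cell):
    """Average brightness of each cell-by-cell block of a 2D grayscale frame."""
    means = {}
    for top in range(0, len(frame) - cell + 1, cell):
        for left in range(0, len(frame[0]) - cell + 1, cell):
            block = [frame[r][c] for r in range(top, top + cell)
                     for c in range(left, left + cell)]
            means[(top, left)] = sum(block) / len(block)
    return means


def overlaps(top, left, cell, box):
    """True if the cell at (top, left) intersects box = (top, left, bottom, right)."""
    b_top, b_left, b_bottom, b_right = box
    return top < b_bottom and top + cell > b_top and left < b_right and left + cell > b_left


def background_jumps(frames, cell=4, threshold=10.0, subject_box=None):
    """Return (frame_index, (top, left)) for background cells whose average
    brightness jumps by more than `threshold` between consecutive frames.
    Cells overlapping `subject_box` (where the subject moves) are skipped."""
    flagged = []
    prev = cell_means(frames[0], cell)
    for i in range(1, len(frames)):
        cur = cell_means(frames[i], cell)
        for (top, left), value in cur.items():
            if subject_box and overlaps(top, left, cell, subject_box):
                continue
            if abs(value - prev[(top, left)]) > threshold:
                flagged.append((i, (top, left)))
        prev = cur
    return flagged
```

Camera motion, lighting changes and compression noise would all trigger this naive version. Production detectors therefore rely on learned features rather than raw brightness. The grid approach still captures the "many small checks at many points" idea described above.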

Andrew Froehlich is founder of InfraMomentum, an enterprise IT research and analyst firm, and president of West Gate Networks, an IT consulting company. He has been involved in enterprise IT for more than 20 years.